Face Recognition, Expressions and Speech


Introduction

This chapter is not just about how we recognise faces, but also about how the visual system interprets them. Facial recognition is essentially a special form of object recognition: a human face can tell us whether a person is young or old, male or female, healthy or unwell, and whether they are happy, sad, frightened, angry or disgusted.

We can also use faces to identify the significant people in our lives, such as our parents, children, friends and spouses, despite changes in lighting, distance or viewing angle.

Face recognition differs from simple object recognition in that edge features are less important for faces, because faces tend to have subtle, sloping features. This is seen in the drawing (left), where it is difficult to identify the face from just the silhouette.

Negative images of faces are hard to recognise because important cues from the relative brightness of the skin and hair are lost and the shadowing around features is distorted, even though the spatial features remain unchanged. This can be seen in the image to the right, where the negative picture of the man makes it extremely difficult to work out what he actually looks like.
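The idea that a negative keeps the spatial layout while reversing brightness relationships can be illustrated with a toy example. This is only a minimal sketch, assuming an 8-bit grayscale image stored as nested lists; the pixel values and the function names `negate` and `horizontal_edges` are made up for illustration.

```python
def negate(image, max_value=255):
    """Return the photographic negative of a grayscale image."""
    return [[max_value - pixel for pixel in row] for row in image]


def horizontal_edges(image):
    """Absolute brightness difference between horizontally adjacent pixels."""
    return [
        [abs(row[i + 1] - row[i]) for i in range(len(row) - 1)]
        for row in image
    ]


# Hypothetical 3x4 patch: dark hair (low values) next to lighter skin (high values).
patch = [
    [30, 40, 200, 210],
    [35, 45, 205, 215],
    [30, 50, 190, 220],
]

negative = negate(patch)

# Edge strength (the spatial layout) is the same in both versions...
same_edges = horizontal_edges(patch) == horizontal_edges(negative)

# ...but the brightness ordering is reversed: the hair is now lighter than the skin.
hair_darker_in_original = patch[0][0] < patch[0][2]
hair_darker_in_negative = negative[0][0] < negative[0][2]
```

In this sketch the edge map is identical for both images, while the hair-versus-skin brightness relationship flips, which is the kind of cue the text says is lost.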

We also know that facial recognition is a holistic process and that the components of a face are much less important than the face as a whole (i.e. a jumbled-up face is not as easily identified as a normal face). This is because the relationships between features are learned, and the more practice the visual system has at looking at faces, the better its facial recognition abilities become.

Furthermore, faces of our own race and age are easier to recognise and judge, as they tend to be more familiar to us. This is likely because people spend the majority of their time with others of their own race and, at school or work, most of their colleagues are in the same age group. This has a substantial impact on the learning effect and may explain why faces of the same race and age are easier to recognise.

Development of Face Perception

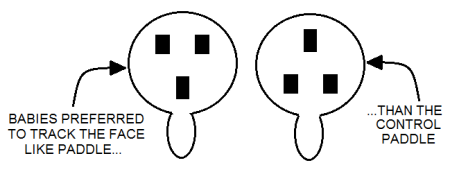

Newborn babies (with an average age of just 9 minutes) prefer to track face-like patterns rather than control patterns (diagram below). This suggests that humans have an innate preference for face-like patterns, which allows babies to attend to their caregivers immediately.

Researchers have found that babies prefer to look at the face-like configuration over the control paddle

This learning process continues, and children learn to recognise the appearance of individuals over a span of approximately 7 months. At the age of 1 month, a baby can discriminate between its mother and a stranger (even though its view of the world is still a blur). This is thought to rely on basic cues, such as hair colour and style, as the child may not recognise its mother if she is wearing a hat or a swimming cap.

Infants aged 1-2 days have been shown to prefer faces with changing facial expressions (such as poking out the tongue, or mouths that open and close) to those with a stable facial expression. These infants will try to imitate the facial expression, showing that there may be an innate knowledge of facial expressions and how to copy them. This knowledge improves with age.

Preschoolers have difficulty in fully recognising faces, and their identification of grossly distorted faces is poor. The facial recognition process improves gradually up to the age of 6 years, after which it begins to plateau.

Perceiving Facial Expressions

There are 6 basic human emotions recognisable from facial expressions. These are:

- Happiness

- Sadness

- Anger

- Disgust

- Surprise

- Fear

We are highly tuned to detect and recognise these, especially the angry face, which aids survival by signalling a hostile member of the social group.

Expressions can be identified from pictures alone by identifying the relative shapes and positions of key facial features and comparing them to our memory banks. However, in real life, we are more likely to use dynamic cues, because the human face is in continuous motion. This can be investigated using point-light displays. The results have shown that we can recognise facial expressions (although not identify them) from facial movement alone, without the actual facial features being visible.

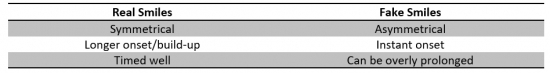

Additionally, to interpret an expression correctly, we need to know the previous and subsequent expressions, as a small smile may just be a small smile or the starting point of a huge grin. This can be seen when attempting to fake a smile. The table below demonstrates the differences between a real and a fake smile.

Despite the above characteristics making it easy to spot a fake smile from a photograph, unfortunately it is a little more difficult to do so during real life communication.

Categorising Faces

We categorise faces in several ways, some of which are described in the following paragraphs.

Age

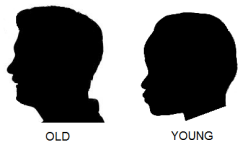

Cardioidal strain is imposed upon the bones of the skull through growth of soft and elastic tissue, which causes a distinctive pattern of change in the 3D structure of the head and face. This change means the head goes from being rounded as a child to a more elongated and flat shape with age. This is demonstrated in the image to the right.

Additionally, surface colouration of the skin and the hair, as well as texture changes of the skin and other body parts can also influence the perception of age.
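The cardioidal strain described above is often written in the literature as a transformation in polar coordinates, R' = R(1 + k(1 - cos θ)), with θ measured from the top of the head and left unchanged. The text here does not give a formula, so treat this form, the parameter `k` and the function names below as assumptions made for illustration. A rough sketch of its effect on a circular "infant" profile:

```python
import math


def cardioidal_strain(r, theta, k):
    """Apply R' = R * (1 + k * (1 - cos(theta))); theta is left unchanged.

    theta is measured from the top of the head, so the top (theta = 0)
    does not move and the chin region (theta = pi) is stretched the most.
    """
    return r * (1 + k * (1 - math.cos(theta))), theta


def strain_profile(points, k):
    """Apply the strain to a list of (r, theta) profile points."""
    return [cardioidal_strain(r, theta, k) for r, theta in points]


# Hypothetical circular profile of radius 1, sampled at the top, side and bottom.
infant = [(1.0, 0.0), (1.0, math.pi / 2), (1.0, math.pi)]

# k is an assumed strain level; larger k corresponds to an older-looking profile.
older = strain_profile(infant, k=0.2)
```

With `k=0.2` the top of the profile stays at radius 1.0 while the side and bottom grow to roughly 1.2 and 1.4, so the rounded outline becomes elongated downwards, in line with the change in head shape described above.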

Gender

Gender is also a way that humans categorise faces. Configuration of the nose relative to the face is very important in this judgement (even more important than the eyes). It has been found that the more protuberant (sticking out) the nose, the more masculine the face.

Humans are 95% accurate at judging gender, even if there are no cues from hair or cosmetics. There are a lot of local cues, such as width and spacing of the eyes and eyebrows, the shape of the cheeks and the shape of the nose, amongst many others.

Attractiveness

Across cultural groups, the faces that are closest to the average of all faces tend to be judged the most attractive. This is possibly due to the brain's preference for "average" faces, as they are easier to process, or because local blemishes and irregularities are smoothed out, leaving the face looking younger and healthier.

However, an average set of attractive faces is still more attractive than an average of unattractive faces. In some conditions, bigger eyes or lips can outdo the average features and be more attractive.
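The smoothing effect of averaging can be shown with a small numerical example. This is a simplified sketch, assuming the faces are already aligned grayscale images of the same size stored as nested lists; real composites also need the facial features to be aligned first, which is not shown. The pixel values and the names `average_face` and `contrast` are hypothetical.

```python
def average_face(faces):
    """Pixel-wise mean of equally sized grayscale images (nested lists)."""
    count = len(faces)
    rows, cols = len(faces[0]), len(faces[0][0])
    return [
        [sum(face[r][c] for face in faces) / count for c in range(cols)]
        for r in range(rows)
    ]


def contrast(image):
    """Difference between the brightest and darkest pixel."""
    pixels = [pixel for row in image for pixel in row]
    return max(pixels) - min(pixels)


# Hypothetical 2x2 skin patches with brightness 180.
# Each has a single dark "blemish" (120) in a different place.
faces = [
    [[120, 180], [180, 180]],
    [[180, 120], [180, 180]],
    [[180, 180], [120, 180]],
    [[180, 180], [180, 120]],
]

composite = average_face(faces)
```

Each individual patch has a contrast of 60, but because the blemishes fall in different places, the composite is perfectly uniform in this toy case, which mirrors the "smoothed out" explanation above.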

A really great website that explains this further is FaceResearch.org, which delves further into face recognition and attractiveness and even allows you to interactively composite your own average faces. I fully recommend you visit!

Facial Speech

All humans who can see will lip-read, and speech can be deciphered much more easily if the speaker's face is visible. Seeing the speaker aids the perception of hard vowels, but it is not just the lips that we pay attention to. Studies have shown:

- 51% of words were read correctly when only lips were observed

- 57% of words were read correctly when lips and teeth were observed

- 78% of words were read correctly when observing the whole face

Lip reading must also be in synchrony with the sound of the words produced. The human brain can only cope with an 80 ms delay between the sound being heard and the facial movement that produced it. This is why a poorly streamed or lagging television programme can be hard to follow if the soundtrack and the picture are not synchronised.
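The 80 ms limit can be expressed as a simple check on the offset between picture and sound. This is only an illustrative sketch: the function name `is_in_sync` is made up, and treating the tolerance as symmetric (sound early or late) is an assumption, since the text only gives a single figure.

```python
MAX_TOLERATED_DELAY_MS = 80  # figure quoted in the text above


def is_in_sync(video_time_ms, audio_time_ms, tolerance_ms=MAX_TOLERATED_DELAY_MS):
    """Return True if picture and sound are close enough to be fused.

    Assumption: the tolerance applies equally whether the sound
    leads or lags the picture.
    """
    return abs(audio_time_ms - video_time_ms) <= tolerance_ms


# Hypothetical timestamps for the same spoken syllable.
slight_lag = is_in_sync(video_time_ms=1000, audio_time_ms=1050)  # 50 ms offset
bad_stream = is_in_sync(video_time_ms=1000, audio_time_ms=1250)  # 250 ms offset
```

Here the 50 ms offset falls within the tolerance, while the 250 ms offset of a lagging stream does not.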

When lip movement and sound do not match, we witness the McGurk effect. This is where the lip-reading actually causes us to perceive a different sound to what is being said. For instance, a man saying "Ba Ba Ba" on a soundtrack but making the mouth movements for saying "Fa Fa Fa" will cause us to interpret the sound as "Fa Fa Fa", even though we are actually hearing "Ba Ba Ba". This example can be seen in the YouTube clip from the BBC Horizon: Is Seeing Believing, a clip that I have embedded below.

See the McGurk Effect in Action (Source: BBC / YouTube)

Gaze Perception

Humans are highly sensitive to the direction of somebody's gaze. This is important in a social situation, as it enables us to determine where a person is looking. When we talk, we would like the other person to look right at us, so a gaze that drifts away from us may indicate that the person is avoiding eye contact because they are lying or bored, whereas direct eye contact can help show that attention is being paid. Furthermore, for survival or mating purposes, direct eye contact with a stranger can attract attention through intimidation or a show of interest.

Humans are sensitive to gaze shifts amounting to one second of arc, which is a very fine discrimination. A high proportion of people can tell if you are looking at another point on their face rather than directly at their eyes.

Facial Recognition as a Holistic Process

Components of a face are much less important than the image of the face as a whole. The face inversion effect (demonstrated in the picture below) shows that facial expressions are harder to interpret when the face is upside down. The two faces are identical: the upside-down face on the left looks relatively normal and happy, but when it is rotated to be the correct way up, the facial features look completely different and tell a different story. Furthermore, our ability to recognise faces is reduced when the face is upside down.

Difference in perceived expression in the face inversion effect

Humans also struggle to recognise a face if the image is in reverse contrast (i.e. in a photo negative, as explained earlier in the article) or halved and combined with another face.

Physiology of Recognition

Faces are perceived in the "face areas" of the fusiform gyrus (which extends over the inferior aspect of temporal and occipital lobes) on the basis of the outputs from the primary visual cortex. These areas were identified via Visual Evoked Potential (VEP) and Positron Emission Tomography (PET) studies.

Physiology of Object Recognition

Essentially object recognition follows the pathway set out in the diagram below:

The inferotemporal areas (PIT and AIT) contain cells sensitive to specific shapes, and these cells are grouped into columns. Neurones start out sensitive to simple geometric shapes but become more sensitive to biological and complex shapes as processing progresses through area IT. This leads to neurones that respond very strongly to faces, hands and other complex objects.

Physiology of Face Recognition

A very high percentage (10%) of cells in area IT show a preference for faces, and the neurones that are sensitive to faces do not respond to any other stimuli. The responses of these cells are sensitive to the spatial configuration of the face, and the response is reduced if the image of the face is scrambled.

Some cells respond more strongly to the faces of specific people, and this forms the basis for selective identity. However, the same face will excite many cells, so it has been suggested that recognition of a face is more likely to be coded by a pattern of activity within a population of cells. It is not currently believed that there is a neurone for each face we may recognise.
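The idea of a face being coded by a pattern of activity across many cells, rather than by one dedicated neurone, can be sketched as a toy model. Everything here is hypothetical: the firing rates are invented, each list position stands for one imaginary cell, and cosine similarity is simply one convenient way to compare patterns, not a claim about how area IT does it.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two activity patterns, ignoring overall firing level."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(observed, known_patterns):
    """Return the name whose stored pattern best matches the observed one."""
    return max(
        known_patterns,
        key=lambda name: cosine_similarity(observed, known_patterns[name]),
    )


# Hypothetical normalised firing rates of five cells for two familiar faces.
# Note that the third cell responds fairly strongly to both faces.
known_patterns = {
    "face_A": [0.9, 0.2, 0.7, 0.1, 0.4],
    "face_B": [0.3, 0.8, 0.6, 0.7, 0.1],
}

# A noisy new view of face A: no single cell matches exactly.
observed = [0.8, 0.3, 0.6, 0.2, 0.5]

best_match = identify(observed, known_patterns)
```

No individual cell identifies the face on its own in this sketch; the match to `face_A` comes from the overall pattern across all five cells.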

It is also believed that area IT is important in face recognition, as damage to area IT can cause agnosias (the loss of the ability to recognise faces, objects, shapes, colours, sounds, smells etc., even though the sense is not defective and there is no significant memory loss). Selective deficits in area IT can cause an inability to recognise objects or classes of objects, and these are known as visual agnosias.

Prosopagnosia is the scientific term for face blindness, where the afflicted cannot recognise faces, whether unfamiliar, familiar or even their own. They can see all of the features that make up the face and classify it as a male or a female face, along with the race, but the face will have no meaning to them. However, they can recognise friends and family when the person speaks, and can even identify them by the clothes that they are wearing. Prosopagnosia is thought to be due to an inability to compare the perceptual representation of the face with those stored in memory, which would otherwise allow for identification.

Lesions in the right hemisphere of IT cause a greater impairment in face processing. In healthy individuals, faces shown to the left visual field are recognised much more quickly than faces shown in the right visual field. Because many object identification processes occur in area IT, prosopagnosia can occur alongside other forms of agnosia, such as achromatopsia - the inability to distinguish between colours, even though the person has no congenital or acquired colour defect.

THIS CONCLUDES THE UNIT ON FACIAL RECOGNITION, EXPRESSIONS AND SPEECH
